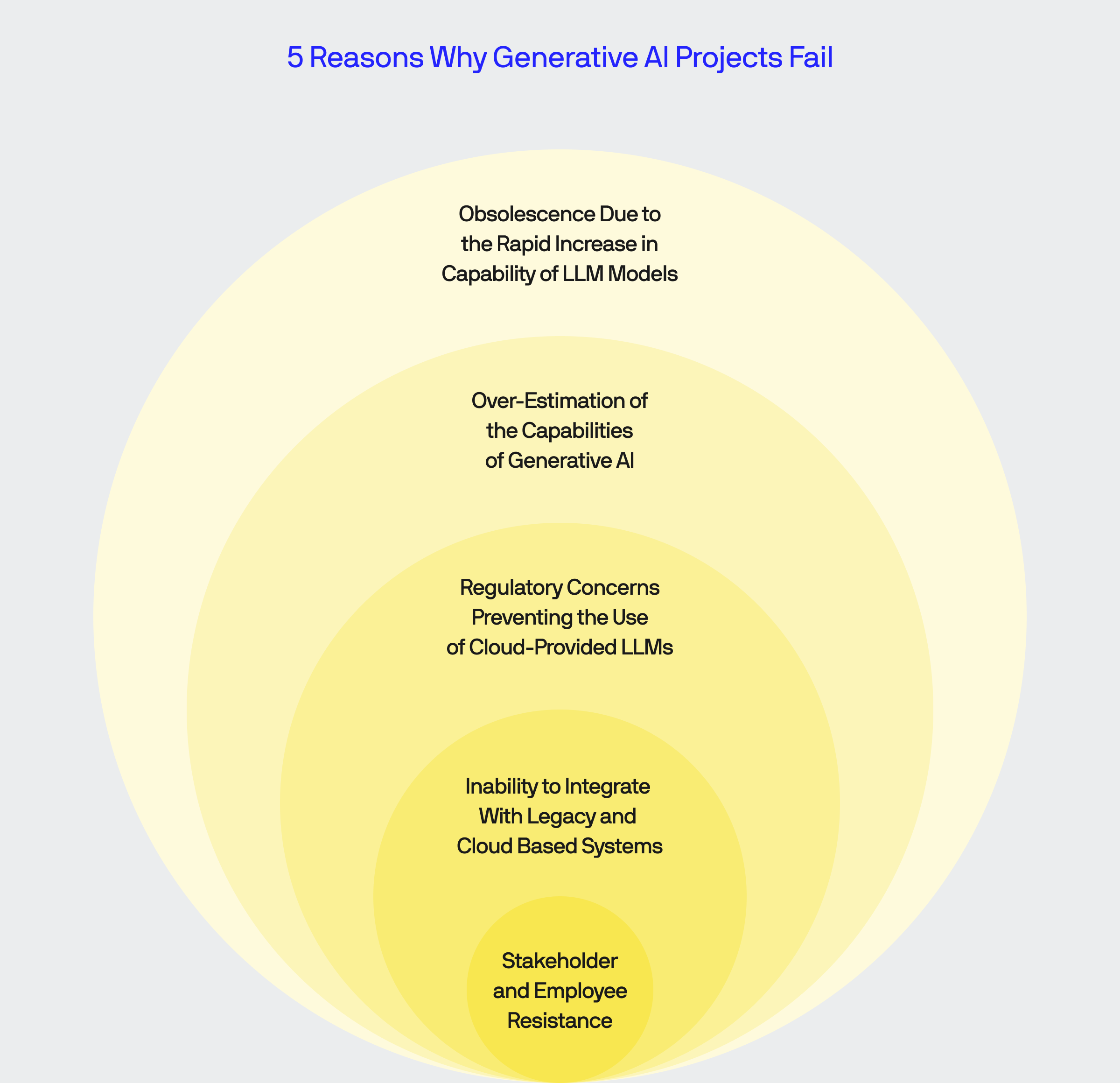

5 Reasons Why Enterprise Generative AI Projects Fail and How to Avoid Them

With the emergence of generative AI, organizations ranging from startups to large enterprises have rushed to take advantage of large language models (LLMs) to automate business processes and create AI-enabled products. However, as with any groundbreaking technology, delivering on the promises of generative AI has proven challenging. The Harvard Business Review projects that almost 80% of generative AI projects end in failure.

In my work at BlueLabel, a full-service generative AI consultancy, I have had the chance to work on a number of projects over the past two years that aimed to integrate generative AI into products and business processes. While my own experience suggests that the 80% figure might be overly pessimistic, I have seen a number of initiatives fail to get off the ground. Many projects fail for organizational and project management reasons that have nothing to do with generative AI specifically, but I have also witnessed a set of challenges unique to generative AI that make these projects particularly difficult.

In this blog post, I will outline the five classes of issues that I’ve seen doom generative AI implementations and share my own guidance on how organizations can avoid these mistakes in their own implementations.

1. Over-Estimation of the Capabilities of Generative AI

As the hype around generative AI grows, so too does the mythology surrounding the amazing feats these models can supposedly perform. Contrary to hyperbolic fears of the emergence of Skynet, today’s state-of-the-art LLMs remain far from anything resembling the holy grail of AI: artificial general intelligence (AGI). The dream of AGI is autonomous AI agents that can independently perform any number of tasks, which is a far cry from what current models can do. While powerful, generative AI models at best supplement existing human workflows; they do not remove the human from the loop. To set realistic goals for a generative AI implementation, it’s essential to understand the types of problems generative AI is well suited to solve. These typically fall into the following categories: content generation, language translation and summarization, conversational agents, creative problem solving, and data cleaning and analysis.

On the other hand, certain problem spaces are poorly suited to generative AI and should be red flags for any project that attempts them. For example, projects in highly regulated or compliance-critical industries should proceed very carefully, as cloud-based LLMs are likely to be unsuitable where data privacy and protection are paramount. While capable, LLMs are also unpredictable and often slow, which makes them wholly unsuited to applications where accuracy and performance are critical, such as real-time, critical decision-making software. Do not, under any circumstance, attempt to use generative AI as part of any manufacturing process or critical safety control software!

Identifying the Right and Wrong Problem Spaces for Generative AI

| Problem Spaces Suitable for Generative AI | Problem Spaces Not Suited for Generative AI |
|---|---|
| Content Generation: Writing articles, generating marketing copy, creating customer service scripts, drafting emails, producing art, enhancing photographs, generating design prototypes, creating music, voice synthesis, sound effects, generating animations, video summaries, and video effects. | Highly Regulated or Compliance-Critical Applications: Financial auditing, legal document drafting requiring precise compliance and deep expertise. |
| Language Translation and Summarization: Real-time translation of text and speech between multiple languages, condensing long documents, articles, or transcripts into concise summaries. | Real-Time Critical Decision-Making: Autonomous vehicles and medical diagnoses that require highly reliable and robust systems. |
| Conversational Agents: Providing customer support, answering FAQs, engaging users in dialogue, assisting with scheduling, reminders, and task management. | Sensitive and Confidential Data Handling: Applications involving PII or classified information requiring strict data privacy and security. |
| Personalization: Tailoring product suggestions, content recommendations, and personalized marketing, creating personalized content, experiences, and services based on user preferences. | Tasks Requiring Deep Domain Expertise and Human Judgment: Strategic business planning, complex narrative creative writing. |
| Creative Problem-Solving: Generating design concepts, architectural plans, and product prototypes, brainstorming new ideas, innovations, and creative solutions to complex problems. | Physical and Safety-Critical Systems: Manufacturing process control, critical infrastructure management. |
| Data Augmentation and Enhancement: Identifying and correcting errors or inconsistencies in datasets, creating synthetic data to augment existing datasets for training purposes. | Emotional and Social Intelligence: Human resources management, handling employee grievances, performance reviews, and customer service for sensitive issues. |

2. Inability to Integrate With Legacy and Cloud-Based Systems

Almost all generative AI projects undertaken in a business setting involve moving data to and from a variety of sources; generative AI implementations are built on data integration pipelines. One of the most significant challenges in implementing these projects is integrating with existing legacy systems, and even with modern cloud providers, to extract the relevant data. For example, in one project we set out to classify and summarize customer service data held in a proprietary third-party CRM system with no API, which presented a foundational barrier to bringing that data into a generative AI workflow. Data silos are not limited to legacy systems, either: siloing is a rapidly growing trend as online data providers seek to prevent their data from being used to train other companies’ LLMs. In one example of this industry-wide trend, Reddit announced in 2023 that it was updating its API terms to restrict, and charge for, the use of its data to train AI models. Make no mistake: it is getting harder every day to pull data from third-party apps and services, and that trend is likely to continue.

Solutions to Breaking Down Data Silos in Generative AI Projects

While the lack of an API, or of access to the underlying database of a line-of-business application, does pose a roadblock to a generative AI implementation, it is not insurmountable. Organizations can adopt several strategies, including using Robotic Process Automation (RPA) tools to extract data from legacy silos. At BlueLabel, we generally turn to UiPath’s suite of enterprise-grade RPA tools for this problem. Using UiPath, Automation Anywhere or, to a lesser extent, Microsoft’s Power Automate, you can extract data from systems through UI automation. Instead of calling an API to retrieve data from a third-party system, you configure an RPA robot that logs in to the app through its UI and then manipulates the web app, much as a real person would, to export data to a CSV file.

Remember that example of customer service data locked in a CRM? We overcame this obstacle with a UiPath robot that ran periodically and automated pressing the ‘Export to CSV’ button in the UI, giving us the data we needed to complete our generative AI workflow. Note, however, that while RPA is a technical means of piercing a data silo, you should review the terms and conditions of the app you are extracting data from to ensure you do not violate your license or terms of use.
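The robot itself is built in UiPath’s own tooling, but the hand-off on the other side of the export can be sketched in code. The Python below is a minimal, hypothetical sketch of that downstream step: it parses the exported CSV, skips empty rows and tickets already handled by a previous periodic run, and builds one classification-and-summarization prompt per ticket. The column names (`ticket_id`, `customer_message`), the prompt wording, and the `seen` set used for de-duplication are all assumptions for illustration, and the actual LLM call is deliberately left out.

```python
import csv
import io
from dataclasses import dataclass

# Hypothetical column names; a real CRM export will use its own headers.
ID_COLUMN = "ticket_id"
TEXT_COLUMN = "customer_message"

# Hypothetical prompt wording for the classify-and-summarize step.
PROMPT_TEMPLATE = (
    "Classify the following customer service message as billing, technical, "
    "account, or other, then summarize it in one sentence.\n\n"
    "Message:\n{message}"
)


@dataclass
class SummaryRequest:
    ticket_id: str
    prompt: str


def load_export(csv_text: str) -> list[dict[str, str]]:
    """Parse the CSV text produced by the robot's 'Export to CSV' step."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def build_requests(
    rows: list[dict[str, str]], seen: set[str], max_chars: int = 2000
) -> list[SummaryRequest]:
    """Build one prompt per new, non-empty ticket and record its ID in `seen`."""
    requests = []
    for row in rows:
        ticket_id = (row.get(ID_COLUMN) or "").strip()
        message = (row.get(TEXT_COLUMN) or "").strip()
        if not ticket_id or not message or ticket_id in seen:
            continue  # skip blank rows and tickets handled by an earlier run
        # Truncate very long messages so every prompt stays within a fixed budget.
        prompt = PROMPT_TEMPLATE.format(message=message[:max_chars])
        requests.append(SummaryRequest(ticket_id=ticket_id, prompt=prompt))
        seen.add(ticket_id)
    return requests


# Example: ticket 102 is empty and ticket 103 was processed on a previous run.
export = "ticket_id,customer_message\n101,My invoice is wrong\n102,\n103,Cannot log in\n"
processed = {"103"}
for request in build_requests(load_export(export), processed):
    print(request.ticket_id)  # prints 101
```

Because the robot runs on a schedule, consecutive exports will usually overlap; persisting the `seen` set between runs (in a small database table, for example) keeps the same ticket from being summarized twice.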

3. Regulatory Concerns Preventing the Use of Cloud-Provided LLMs

In many industries, regulatory and compliance requirements create significant hurdles to adopting cloud-provided large language models (LLMs) such as ChatGPT. Organizations in the healthcare and financial sectors, in particular, must adhere to strict data privacy and security regulations. These regulations often mandate that sensitive data remain within the organization’s IT systems and prohibit the use of this data to train or improve third-party AI models. These constraints can sideline a generative AI project in these industries before it has had the chance to start.

Solutions to Overcoming Regulatory Concerns in Generative AI Projects

To navigate these regulatory challenges, organizations can leverage the diverse ecosystem of open-source LLMs available through platforms like Hugging Face. These models can be downloaded, adapted, and fine-tuned entirely within the organization’s IT environment, ensuring that sensitive data remains secure and compliant. Here’s how this approach can be beneficial:

- On-Premise Deployment: Open-source LLMs can be deployed on-premises, within the organization’s secure IT infrastructure. This ensures that data does not leave the organization’s controlled environment.

- Custom Training: Organizations can download, adapt, and fine-tune open-source models such as Meta’s Llama on their own data without sharing it with external parties. This maintains data privacy and ensures that proprietary information remains confidential.

- Regulatory Compliance: By using models that do not require data to be sent to third-party providers, organizations can meet regulatory requirements and avoid compliance issues.

- Flexibility and Control: Open-source models offer greater flexibility and control, allowing organizations to tailor the models to their specific needs and update them as required without relying on external providers.
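One small, code-level safeguard that complements an on-premise deployment is to refuse to send prompts anywhere other than an approved internal endpoint. The sketch below is a hypothetical example of such a check, not a feature of any particular model server. The hostnames and the `check_endpoint` helper are placeholders, and in practice a check like this would sit alongside network-level controls rather than replace them.

```python
from urllib.parse import urlparse

# Hypothetical approved hosts for a self-hosted open-source model server.
APPROVED_LLM_HOSTS = frozenset({"llm.internal.example.com", "localhost"})


class DataResidencyError(RuntimeError):
    """Raised when a request would send data outside the approved environment."""


def check_endpoint(url: str, approved_hosts: frozenset[str] = APPROVED_LLM_HOSTS) -> str:
    """Return `url` unchanged if it points at an approved internal host, else raise."""
    parsed = urlparse(url)
    host = parsed.hostname  # lowercased, with port and credentials removed
    if host is None or host not in approved_hosts:
        raise DataResidencyError(f"{url!r} is not an approved LLM endpoint")
    # Require TLS, except for a model served on the same machine over plain http.
    if parsed.scheme == "https" or (parsed.scheme == "http" and host == "localhost"):
        return url
    raise DataResidencyError(f"{url!r} must use https")


print(check_endpoint("https://llm.internal.example.com:8443/v1/completions"))
try:
    check_endpoint("https://api.openai.com/v1/chat/completions")
except DataResidencyError as err:
    print("blocked:", err)
```

Calling a check like this immediately before every LLM request gives the team a single place to audit which hosts may receive sensitive data.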

However, it’s important to note that while some open-source LLMs such as Mixtral and Meta’s Llama 3 are quite capable, they still struggle to compete with the latest models from OpenAI. Generally, you should consider the best open-source LLMs to be about half a generation behind the state-of-the-art offerings from OpenAI or Anthropic.

4. Obsolescence Due to the Rapid Increase in Capability of LLMs

The field of large language models (LLMs) is advancing at a breathtaking pace, with new models offering enhanced capabilities and improved performance released constantly. While this rapid progress is exciting, it poses significant challenges for companies building generative AI-powered apps: projects can become obsolete before they are even completed as newer, more capable models and tooling emerge from OpenAI and other providers. For example, after the introduction of GPT-4 in March 2023, an entire cottage industry of startups emerged to build solutions on top of GPT-4 by wrapping the base model in a series of highly customized prompts. In November 2023, however, OpenAI introduced its custom GPT functionality, which in the blink of an eye rendered entire swaths of these projects obsolete. This is less of a concern for organizations using generative AI to automate business processes, but for those building apps and services with generative AI functionality, there is a very real threat that a project will be rendered obsolete before it is finished.

Strategies for Managing Rapid Advancement

While the risk of obsolescence cannot truly be guarded against, there are strategies organizations can pursue to help manage and adapt to the rapid advancement of LLM capabilities.

- Modular Project Design: Develop AI projects in a modular fashion, so that individual components can be updated or replaced without overhauling the entire system. This approach makes it much easier to integrate newer models as they become available.

- Continuous Learning and Adaptation: Establish a culture of continuous learning and adaptation within the organization. Encourage teams to stay informed about the latest developments in the field and be prepared to incorporate new advancements as part of an ongoing process.

- Vendor and Model Agnosticism: Maintain a vendor-agnostic and model-agnostic stance. By not committing to a single provider or model, organizations can more easily switch to newer, more advanced models as they become available. A good practice is for development teams to build against intermediate frameworks such as LangChain and LlamaIndex rather than directly against OpenAI’s API layer; these frameworks abstract vendor-specific APIs behind a general programming model that keeps your code relatively vendor- and model-agnostic.
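LangChain and LlamaIndex provide this kind of abstraction out of the box; the plain-Python sketch below is not their API, only an illustration of the underlying idea. Application code depends on a small `ChatModel` interface, and each vendor or self-hosted model sits behind an adapter selected by configuration. The two adapters here are stand-ins so the example runs without network access; in a real system each would wrap a vendor SDK, and all names (`ChatModel`, `get_model`, `summarize_ticket`, the provider keys) are hypothetical.

```python
from typing import Callable, Protocol


class ChatModel(Protocol):
    """Minimal vendor-neutral interface the rest of the application codes against."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in provider for tests; a real adapter would wrap a vendor SDK."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseModel:
    """A second stand-in, showing that providers are interchangeable."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


# Registry mapping configuration names to adapter factories (names are hypothetical).
PROVIDERS: dict[str, Callable[[], ChatModel]] = {
    "echo": EchoModel,
    "upper": UppercaseModel,
}


def get_model(name: str) -> ChatModel:
    """Instantiate the adapter named in configuration."""
    try:
        return PROVIDERS[name]()
    except KeyError:
        raise ValueError(f"Unknown model provider: {name!r}") from None


def summarize_ticket(model: ChatModel, text: str) -> str:
    # Business logic depends only on the ChatModel interface, never on a vendor SDK.
    return model.complete(f"Summarize: {text}")


print(summarize_ticket(get_model("echo"), "refund request"))  # prints: echo: Summarize: refund request
```

Switching providers then becomes a configuration change (`get_model("upper")` instead of `get_model("echo")`) rather than a rewrite of the business logic in `summarize_ticket`.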

5. Internal Stakeholder and Employee Resistance

One of the most significant obstacles to the successful completion of generative AI projects is resistance from internal stakeholders and employees. This resistance often stems from fear: fear of job loss, fear of being replaced by AI, and fear of the unknown. These concerns can create substantial barriers to AI adoption and hinder project progress. If not addressed, they can doom any generative AI implementation through a lack of buy-in, delays caused by reluctance to cooperate, and under-utilization of the deployed AI system by employees who do not understand or trust it.

Strategies for Addressing Internal Resistance to Generative AI Initiatives

- Education and Training: One of the most effective ways to overcome resistance is through education. Providing comprehensive training sessions can help demystify AI and alleviate fears. Employees need to understand what generative AI is, how it works, and how it can enhance their roles rather than replace them.

  - Workshops and Seminars: Conduct workshops and seminars to educate employees about AI, its capabilities, and its limitations.

  - Hands-On Training: Offer hands-on training sessions where employees can interact with AI tools and see firsthand how they can aid in their daily tasks.

- Focus on Benefits to Day-to-Day Work: Shifting the conversation from job replacement to job enhancement can help mitigate fears. Highlight how AI can handle repetitive, mundane tasks, allowing employees to focus on more strategic, creative, and fulfilling aspects of their jobs.

  - Efficiency and Productivity: Explain how AI can automate time-consuming processes, increasing overall efficiency and productivity.

  - Support and Assistance: Emphasize AI’s role as a supportive tool that assists employees in making better decisions, providing insights, and enhancing their capabilities.

Conclusion

The rapid advancement of generative AI holds immense potential, but it also brings a set of challenges that can lead to project failure if not managed properly. From overestimating AI capabilities to integration issues, regulatory concerns, rapid obsolescence, and internal resistance, these obstacles can be daunting. At BlueLabel, we have witnessed firsthand the pitfalls that many organizations face on their AI journeys. By sharing our insights and strategies, we hope to help others avoid these common mistakes and successfully harness the power of generative AI.

Bobby Gill