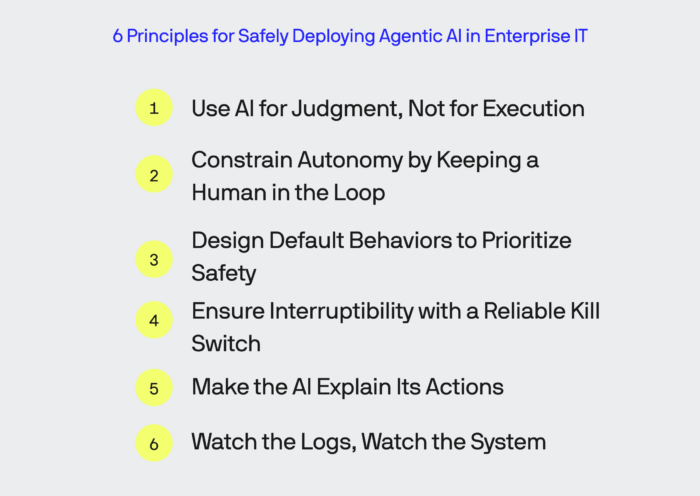

6 Principles for Safely Deploying Agentic AI in Enterprise IT

Agentic AI systems are thrilling to see in action in a prototype or development environment, but the thought of letting them run wild in a corporate IT production environment is downright terrifying. Time and again in our work at BlueLabel, I’ve seen people create truly innovative solutions with AI technologies, only to face resistance from IT departments when it comes time to deploy them. And, to be fair, the IT teams are right to push back. In environments where compliance, governance, and legacy systems are king, an unchecked AI isn’t just risky—it’s a disaster waiting to happen.

At BlueLabel, we’ve worked with enterprises to deploy agentic AI systems in production environments where precision, accountability, and adherence to governance frameworks aren’t optional. In this article, I’ll share some principles for designing and deploying agentic systems in corporate IT environments in a way that embraces good governance and compliance. These aren’t best practices so much as actionable insights (sometimes learned very painfully!) into how agentic systems can be deployed safely and effectively in enterprise production environments.

Understanding Agentic AI

Agentic AI systems stand apart from traditional AI applications such as the now-ubiquitous Retrieval-Augmented Generation (RAG) chat apps, which use large language models (LLMs) to answer questions or respond to prompts. Put simply, agentic systems take action. Picture, if you will, an Access Management Agent integrated with Slack: a hypothetical system designed to handle user access requests while ensuring compliance with corporate policies. Unlike a RAG app that simply fetches policy documents or answers questions, this agentic system would independently evaluate requests, cross-reference them against corporate requirements, and decide whether to grant access or escalate for approval. It would seamlessly adapt to policy changes, orchestrate multi-step workflows (like notifying approvers and reminding forgetful managers), and handle ambiguities by asking clarifying questions or defaulting to the safest course of action. In theory, it sounds like a dream, until you realize just how much control you’d be handing over to an AI.

Now, propose this to an enterprise IT professional, and you might as well hand them a paper bag to breathe into and a (toy) gun to shoot you with. The mere thought of an AI frolicking through Active Directory, autonomously managing permissions, is enough to give even the most seasoned compliance officer a stress headache. “Who approved this? What did the AI do? Is it auditing itself, or have we unleashed an LDAP-enabled Skynet?” they might ask, with a wild look in their eyes. While this hypothetical Access Management Agent highlights the promise of agentic systems, it also lays bare the immense challenges of deploying them safely in corporate environments. The stakes are high, the risks are real, and the need for robust safeguards is non-negotiable. But you have to admit, the idea of AI wrangling access requests is pretty enticing.

6 Principles for Safe Development and Deployment of Agentic Systems in Corporate IT Environments

1. Use AI for Judgment, Not for Execution

Not every task needs an agentic AI, and not every component of an agentic system needs to be AI-driven. This isn’t just a philosophical stance; it’s a survival strategy. Large language models (LLMs), the backbone of many agentic systems, are notoriously non-deterministic. They’re brilliant at processing complex requests and navigating ambiguity, but they’re also prone to unpredictable leaps of logic. For tasks where precision and repeatability are paramount, like granting access to critical systems, relying entirely on an AI is like trusting a fortune teller to balance your company’s books.

The key is to separate the parts of the task that genuinely require the AI’s adaptability from those that can and should be handled with traditional, deterministic code. For example, in our hypothetical Access Management Agent, the AI shouldn’t be granted direct write access to Active Directory. Instead, it should interface with a well-defined programmatic API that enforces internal processes and guidelines for access management. The AI might determine whether Bob from Marketing should have access to a shared folder based on policies, roles, and a dash of contextual flair, but the actual write operation (modifying permissions in AD) should be executed by traditional, predictable code.

This layered approach ensures the AI operates within a controlled framework, where its creative problem-solving is kept separate from the critical, compliance-heavy operations. It reduces risk, provides better auditability, and keeps your IT team from spiraling into existential dread every time the agent makes a decision. Start with the mantra: “AI for nuance, code for rules,” and you’ll be on the right track.
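To make the split concrete, here is a minimal Python sketch, assuming the model is prompted to return a structured decision rather than calling any directory API itself. Every name in it is hypothetical: `AccessDecision`, the `SELF_SERVICE_RESOURCES` allowlist, and `InMemoryDirectory`, which stands in for whatever real access-management API you expose. The point is that `execute` is ordinary deterministic code and the only path that can change permissions.

```python
from dataclasses import dataclass
from typing import Literal, Set, Tuple

Action = Literal["grant", "deny", "escalate"]


@dataclass(frozen=True)
class AccessDecision:
    """Hypothetical structured output the model produces; it proposes, it never writes."""
    user: str
    resource: str
    action: Action
    rationale: str


# Hypothetical allowlist owned by IT, not by the model.
SELF_SERVICE_RESOURCES = {"shared/marketing-assets", "shared/stock-photos"}


class InMemoryDirectory:
    """Stand-in for a real access-management API; records grants instead of calling AD."""

    def __init__(self) -> None:
        self.grants: Set[Tuple[str, str]] = set()

    def add_member(self, user: str, resource: str) -> None:
        self.grants.add((user, resource))


def execute(decision: AccessDecision, directory: InMemoryDirectory) -> str:
    """Deterministic gate: the only code path allowed to change permissions."""
    if decision.action != "grant":
        return decision.action  # deny and escalate never write anything
    if decision.resource not in SELF_SERVICE_RESOURCES:
        return "escalate"  # outside the allowlist: refuse to write, hand to a human
    directory.add_member(decision.user, decision.resource)
    return "granted"


if __name__ == "__main__":
    directory = InMemoryDirectory()
    print(execute(AccessDecision("bob", "shared/stock-photos", "grant", "role match"), directory))  # granted
    print(execute(AccessDecision("bob", "finance/payroll", "grant", "seems fine"), directory))  # escalate
```

If the model approves a request for a resource outside the allowlist, the gate returns `"escalate"` and nothing is written, however confident the model sounded.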

2. Constrain Autonomy by Keeping a Human in the Loop

Autonomy is great until it grants admin rights to the wrong person. For an agentic AI like the Access Management Agent, humans need to stay in the loop for decisions that actually matter. Let the AI handle routine tasks, like giving Tasha from Marketing access to the stock photo folder, but when it comes to financial systems or sensitive HR data, the agent should pause and pass the baton. The AI can recommend actions, but humans should have the final say where the stakes are high.

Building human-in-the-loop workflows isn’t as hard as it sounds. Platforms like Botpress or programming frameworks like LangGraph make it straightforward to build systems where the AI routes tricky decisions to Slack or email for human approval. The AI does the grunt work (evaluating policies, flagging edge cases, and teeing up decisions), but a human signs off. This isn’t a limitation; it’s good governance. If keeping a human in charge of critical decisions sounds old-school, remember: “trust, but verify” is a cliché for a reason.
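The routing idea can be sketched without any particular framework. This is a minimal illustration, assuming a simple path-prefix rule decides what counts as sensitive; LangGraph and Botpress each have their own mechanisms for pausing a workflow, and this code does not model either. `SENSITIVE_PREFIXES`, `ApprovalQueue`, and `route` are hypothetical names, and a comment marks where a real system would post to Slack or email.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical sensitivity rule: anything under these paths needs a human.
SENSITIVE_PREFIXES = ("finance/", "hr/")


@dataclass
class PendingRequest:
    request_id: str
    user: str
    resource: str
    recommendation: str  # the agent's suggested action, shown to the approver
    approved_by: Optional[str] = None


@dataclass
class ApprovalQueue:
    pending: Dict[str, PendingRequest] = field(default_factory=dict)

    def submit(self, user: str, resource: str, recommendation: str) -> str:
        request_id = str(uuid.uuid4())
        self.pending[request_id] = PendingRequest(request_id, user, resource, recommendation)
        # A real system would post the request to Slack or email here.
        return request_id

    def approve(self, request_id: str, approver: str) -> PendingRequest:
        # Raises KeyError if the request is unknown or was already handled.
        request = self.pending.pop(request_id)
        request.approved_by = approver
        return request


def route(user: str, resource: str, recommendation: str, queue: ApprovalQueue) -> str:
    """Let routine recommendations proceed; park sensitive ones for a human."""
    if resource.startswith(SENSITIVE_PREFIXES):
        return "pending:" + queue.submit(user, resource, recommendation)
    # Routine path: in practice this still goes through deterministic execution code.
    return "auto:" + recommendation
```

Tasha’s stock-photo request takes the `auto:` path, while anything under `finance/` or `hr/` waits in the queue until a named person calls `approve`, which also records who signed off.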

3. Design Default Behaviors to Prioritize Safety

An agent without defaults is a loose cannon. When it doesn’t know what to do, it needs a clear rule: play it safe. If the Access Management Agent is unsure whether Bob from Marketing should have admin access (spoiler: he shouldn’t), it should stop and ask for help, not guess. Caution isn’t just a feature; it’s the difference between a helpful AI and one that accidentally emails the CEO’s calendar to the entire company.

Defaults should reflect common-sense priorities: compliance over speed, safety over convenience, and humility over overconfidence. If the AI can’t figure out what to do, it should err on the side of doing less harm. And always leave room for a human override. As my grandmother always said, “A safe AI is a lobotomized AI, and a trustworthy one,” because the only thing worse than an agent making mistakes is one that refuses to admit what it doesn’t know.
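One way to encode “when in doubt, escalate” is to treat every failure mode of the model’s output as a reason to hand off. The sketch below assumes the model returns JSON with hypothetical `action` and `confidence` fields, and it uses an arbitrary 0.9 threshold. Self-reported LLM confidence is a weak signal, so treat the threshold as one guardrail among several rather than a calibrated probability.

```python
import json

CONFIDENCE_THRESHOLD = 0.9  # hypothetical; tune to your own risk tolerance
VALID_ACTIONS = {"grant", "deny", "escalate"}


def safe_decision(raw_model_output: str) -> str:
    """Return the action to take, defaulting to 'escalate' whenever anything is unclear."""
    try:
        parsed = json.loads(raw_model_output)
    except json.JSONDecodeError:
        return "escalate"  # unparseable output: ask a human instead of guessing
    if not isinstance(parsed, dict):
        return "escalate"
    action = parsed.get("action")
    confidence = parsed.get("confidence")
    if not isinstance(action, str) or action not in VALID_ACTIONS:
        return "escalate"  # missing, malformed, or unknown action
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        return "escalate"
    # Written as "not >=" so that NaN (which json.loads accepts) also escalates.
    if not confidence >= CONFIDENCE_THRESHOLD:
        return "escalate"  # the model is unsure: do less, not more
    return action
```

Note that the safe default is `"escalate"`, not `"deny"`: a silent denial can be just as disruptive as a bad grant, while escalation keeps a human informed.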

4. Ensure Interruptibility with a Reliable Kill Switch

Every agentic AI needs a big red “stop” button. If the Access Management Agent starts approving access requests like it’s handing out candy on Halloween, you need a way to shut it down—fast. The kill switch should work at any time, whether to stop a specific task, revoke recent changes, or take the whole system offline. Redundancy is key: multiple people, from admins to compliance officers, should have the authority to pull the plug.

But a kill switch isn’t just about stopping chaos; it’s about stopping it gracefully. The AI should have fallback procedures, like notifying users of delays or rolling back incomplete changes. Interruptibility isn’t just a safety feature; it’s how you keep your AI from becoming the cautionary tale in the next episode of Darknet Diaries.
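As a rough illustration of a graceful stop, the sketch below checks a shared flag before every step. If the flag has been tripped, it undoes the steps already applied and notifies the requester. It assumes each step comes with an undo function, although some real actions (a sent email, for instance) cannot be undone. It also uses an in-process `threading.Event`. In production, the switch would need to live outside the agent, for example in a feature-flag service, so that several people can flip it. `KillSwitch` and `run_task` are hypothetical names.

```python
import threading
from typing import Callable, List, Optional, Tuple

# Each step is an (apply, undo) pair of zero-argument callables.
Step = Tuple[Callable[[], None], Callable[[], None]]


class KillSwitch:
    """In-process stand-in for an externally controlled stop flag."""

    def __init__(self) -> None:
        self._stopped = threading.Event()
        self.tripped_by: Optional[str] = None

    def trip(self, who: str) -> None:
        self.tripped_by = who  # record who pulled the plug, for the audit trail
        self._stopped.set()

    def is_tripped(self) -> bool:
        return self._stopped.is_set()


def run_task(steps: List[Step], kill: KillSwitch, notify: Callable[[str], None]) -> str:
    """Run steps in order, checking the switch before each; roll back if it is tripped."""
    undo_stack: List[Callable[[], None]] = []
    for apply, undo in steps:
        if kill.is_tripped():
            # Undo completed steps in reverse order so later changes unwind first.
            for undo_step in reversed(undo_stack):
                undo_step()
            notify("Your request was stopped by an administrator; no changes were kept.")
            return "rolled_back"
        apply()
        undo_stack.append(undo)
    return "completed"
```

A switch tripped after the final step cannot be handled by this loop; reverting changes that have already completed is the separate “revoke recent changes” capability described above.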

5. Make the AI Explain Its Actions

If an agentic AI is going to make decisions, it needs to own them—or at least explain them in a way humans can understand. The Access Management Agent should document every action it takes: who requested access, why it was approved or denied, and which policies were applied. These logs shouldn’t be buried in a data swamp either; they need to integrate seamlessly with tools your IT team already uses, like Splunk, ServiceNow, or Microsoft Purview. When something inevitably goes wrong, a clear audit trail turns “What just happened?” into “Ah, here’s exactly what happened.”

But transparency is only half the battle. Accountability means you can trace every decision back to a responsible party, whether that’s the AI, the developer who coded it, or the manager who approved its deployment. Assign a unique identifier to each agent invocation, use it to group every log entry emitted during that execution, and make sure every human who initiated or took part in the execution is properly attributed. When the CFO asks who gave the intern access to payroll, “The AI did it” isn’t an acceptable answer. An agent that logs its reasoning, explains its actions, and points to who’s accountable isn’t just easier to manage; it’s safer for everyone involved.
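Here is a minimal sketch of invocation-scoped, structured logging using Python’s standard `logging`, `json`, and `uuid` modules. `AgentRun` and the field names (`invocation_id`, `initiated_by`, `approved_by`, `policies`) are assumptions for illustration, as is the policy ID `POL-12`. Emitting one JSON object per line is a format many log pipelines can ingest, but check how your own Splunk or ServiceNow setup expects events to be shaped.

```python
import json
import logging
import uuid
from datetime import datetime, timezone
from typing import Any, Dict

logger = logging.getLogger("access_agent")  # hypothetical logger name


class AgentRun:
    """Tags every log entry from one agent execution with the same invocation ID."""

    def __init__(self, initiated_by: str) -> None:
        self.invocation_id = str(uuid.uuid4())
        self.initiated_by = initiated_by  # the human who triggered this run

    def record(self, event: str, **details: Any) -> Dict[str, Any]:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "invocation_id": self.invocation_id,
            "initiated_by": self.initiated_by,
            "event": event,
            **details,  # e.g. action, policies applied, rationale, approved_by
        }
        # One JSON object per line is easy for log pipelines to parse.
        logger.info(json.dumps(entry))
        return entry


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    run = AgentRun(initiated_by="bob@example.com")
    run.record("request_received", resource="shared/stock-photos")
    run.record("decision", action="grant", policies=["POL-12"],
               rationale="Marketing role matches folder policy", approved_by=None)
```

Filtering on a single `invocation_id` then returns the whole story of one request, from the human who asked to the policy that was applied and the person (if any) who approved it.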

6. Watch the Logs, Watch the System

Agentic systems need someone—or something—watching them. Whether it’s a person or a tool, monitoring logs to ensure the system is doing what it should isn’t optional; it’s fundamental. Log monitoring isn’t unique to agentic systems—it’s just good practice for any enterprise environment. But with agentic systems, where autonomy and decision-making are baked into the design, having a reliable “watcher” is non-negotiable. The stakes are too high to leave these systems unchecked.

Platforms like Datadog or Elastic Stack (ELK) are powerful options for real-time log monitoring, offering tools to analyze, detect anomalies, and visualize data from agentic systems. If those feel like overkill or strain your budget, a simpler approach can be just as effective: create a standard operating procedure for a designated role to review an extracted sample of logs daily. This person should look for anomalies, unexpected patterns, or signs the system might be veering off course. It’s not flashy, but it works.
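A small script can support the daily-sample procedure. This sketch assumes each log line is a JSON object with hypothetical `event`, `action`, `resource`, `invocation_id`, and `approved_by` fields. The sensitivity prefixes and the grant-volume baseline are also made up for illustration. The script flags two simple anomalies and draws a random sample for a human to read. It is not a substitute for a real anomaly-detection tool.

```python
import json
import random
from typing import Iterable, List, Optional, Tuple

SENSITIVE_PREFIXES = ("finance/", "hr/")  # hypothetical
MAX_GRANTS_PER_DAY = 50  # hypothetical baseline; derive yours from history


def daily_review(log_lines: Iterable[str], sample_size: int = 20,
                 seed: Optional[int] = None) -> Tuple[List[str], List[dict]]:
    """Flag simple anomalies and pull a random sample of decisions for a human reviewer."""
    entries = [json.loads(line) for line in log_lines if line.strip()]
    decisions = [e for e in entries if e.get("event") == "decision"]
    grants = [e for e in decisions if e.get("action") == "grant"]

    findings: List[str] = []
    if len(grants) > MAX_GRANTS_PER_DAY:
        findings.append(f"grant volume {len(grants)} exceeds baseline of {MAX_GRANTS_PER_DAY}")
    for e in grants:
        sensitive = str(e.get("resource", "")).startswith(SENSITIVE_PREFIXES)
        if sensitive and not e.get("approved_by"):
            findings.append(f"sensitive grant without approver in run {e.get('invocation_id')}")

    rng = random.Random(seed)  # a fixed seed makes the sample reproducible for audits
    sample = rng.sample(decisions, min(sample_size, len(decisions)))
    return findings, sample
```

The findings point the reviewer at runs that definitely need a look, and the random sample covers the “unexpected patterns” that no rule anticipated.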

As for the idea of AI monitoring other AIs, it’s already happening. Some organizations are experimenting with secondary AIs designed to police the primary ones, like compliance officers for their agentic counterparts. While this might be the future of scaled AI oversight, I think it’s a gamble in production systems. Entrusting one autonomous system to manage another’s behavior introduces new risks and complexities. For now, this approach is better suited for experimental environments where failure is part of the plan, not a career-ending disaster.

De-Risking Agentic AI in the Enterprise

Deploying agentic AI systems into a corporate IT environment is no small task. These systems hold immense potential to transform how enterprises operate, but they also introduce unique risks that can’t be ignored. The principles I’ve outlined here, drawn from the work of my teams at BlueLabel, are not meant to be exhaustive or a definitive list of best practices. They’re a starting point, grounded in the practical realities of designing and deploying agentic systems in environments where governance, compliance, and trust are critical.

At BlueLabel, we’ve been in the trenches, navigating these challenges alongside our clients. The lessons shared here were learned the hard way—on the ground, solving real-world problems for enterprises trying to balance innovation with security and control. If you’re looking to harness the power of agentic AI without creating chaos, these principles are a solid place to begin. And when you’re ready to take the next step, we’re here to help you deploy systems that are not only powerful but also safe, reliable, and aligned with your enterprise’s goals.

Bobby Gill