How to Use LangChain and MapReduce to Classify Text Using an LLM

In this post, I will walk through how to use the MapReduce algorithm with LangChain to recursively analyze a large set of text data to generate a set of ‘topics’ covered within that text.

The example uses a large set of textual data: a collection of Instagram posts written by a fertility influencer covering various reproductive health topics. Each post generally has a caption of around 300-400 words. The goal is to scan all the posts, create a canonical set of the reproductive health topics this corpus covers, and output them to a file.

Note: all the source code used in this post is available to run at home in the BlueLabel AI Cookbook GitHub repository.

Input limits of modern LLMs

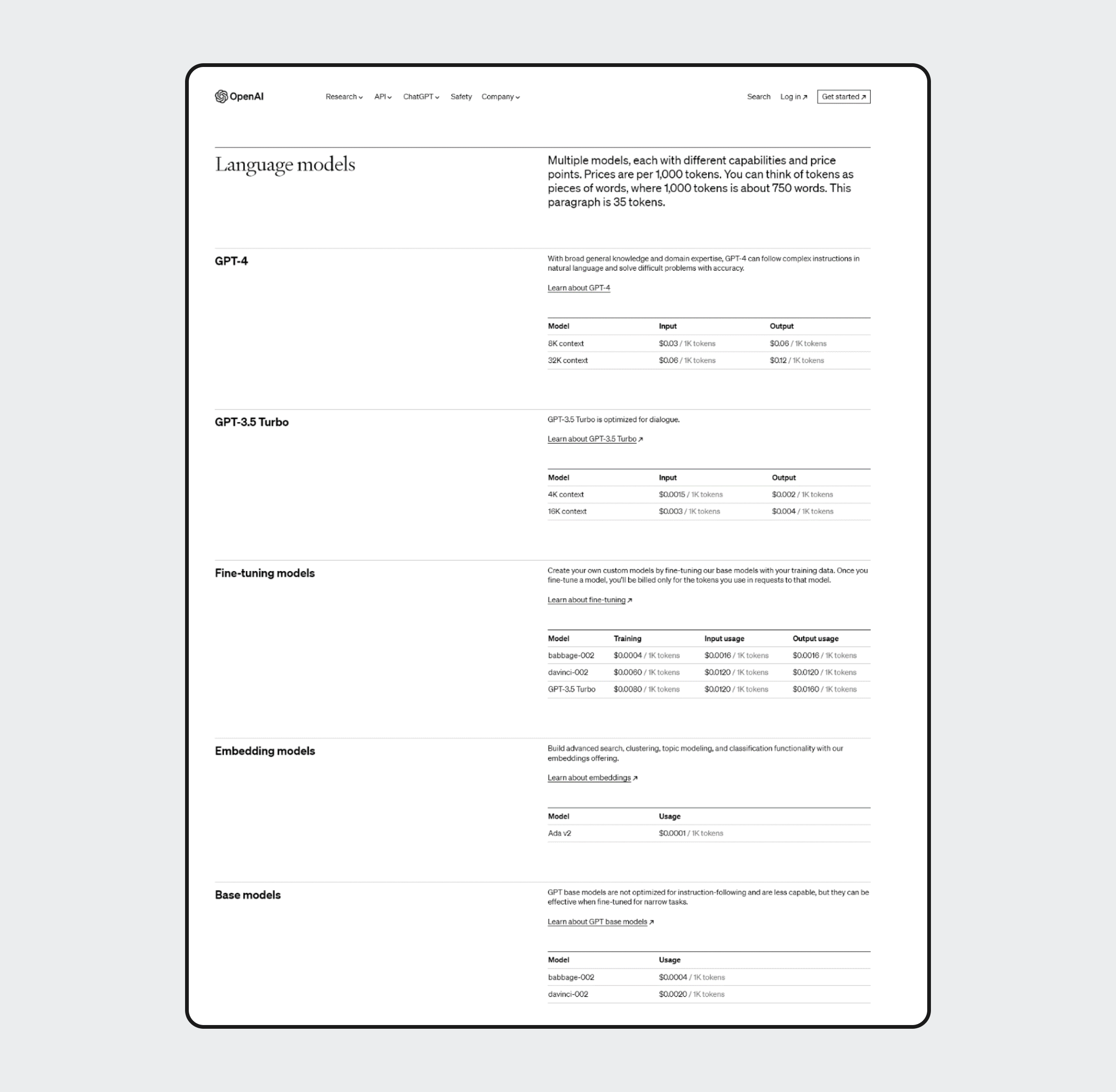

One of the challenges of summarization and classification problems with LLMs is the limit on input tokens that most LLM providers have in place. For example, at the time of writing, OpenAI’s GPT-4 has an input token limit of 8K, while Anthropic’s Claude has a roomier 100K.

A quick look at token limitations and pricing for GPT systems. | Source: OpenAI

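For simplicity, one can roughly equate a token to a word (OpenAI’s own rule of thumb is that a token is about three-quarters of an English word). Thus, a 4000-token limit means the largest prompt you can provide to GPT is at most about 4000 words long. So, in the problem we are trying to solve, a limit of that size would only allow us to pass in 5-6 post captions at a time once room is left for the instructions and the response.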
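The arithmetic behind that estimate can be sketched in a few lines. The helper below is hypothetical and not part of the original notebook. It assumes roughly 4/3 tokens per English word (OpenAI's rule of thumb) and reserves 1,000 tokens for the instructions and the model's response, a figure chosen purely for illustration.

```python
import math

# Assumption: about 4/3 tokens per English word (OpenAI's rule of thumb).
TOKENS_PER_WORD = 4 / 3


def captions_that_fit(token_limit: int, words_per_caption: int,
                      reserved_tokens: int = 1000) -> int:
    """Estimate how many captions fit in a single prompt.

    reserved_tokens is an illustrative allowance for the prompt
    instructions and the model's response, not a measured value.
    """
    tokens_per_caption = math.ceil(words_per_caption * TOKENS_PER_WORD)
    available = max(token_limit - reserved_tokens, 0)
    return available // tokens_per_caption


if __name__ == "__main__":
    for words in (300, 400):
        print(words, "words per caption ->", captions_that_fit(4000, words))
```

Under these assumptions only a handful of captions fit in one call, which is why the corpus has to be processed in pieces.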

Given that limitation, how can we create a unified set of topics across all Instagram posts? That’s where LangChain’s implementation of MapReduce comes in via the MapReduceDocumentsChain.

What is MapReduce?

MapReduce is an algorithmic approach that solves a large problem by applying the same operation independently to smaller pieces of the input (the map step) and then combining those partial outputs into a solution to the original problem (the reduce step).

For our example, we can use MapReduce to break the problem into the following steps:

- Map Step: We will go through each document one by one and derive a topic classification for each post individually. The LLM will be used to create these classifications, which are unique to each post.

- Reduce Step: After all documents have topics generated, we use the LLM to coalesce them into a single set of topics that are unique and conceptually different.
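The two steps above can be sketched without any LLM at all. In this toy version, `fake_extract_topics` and `fake_merge_topics` are hypothetical stand-ins for the LLM calls (a keyword lookup and a de-duplication), and the sample posts are invented; only the shape of the algorithm is the point.

```python
from typing import Callable, Iterable, List


def fake_extract_topics(post: str) -> List[str]:
    """Stand-in for the LLM 'map' call: tag a post by keyword lookup."""
    keywords = {"ivf": "IVF", "pcos": "PCOS", "ovulation": "Ovulation"}
    text = post.lower()
    return [topic for word, topic in keywords.items() if word in text]


def fake_merge_topics(topic_lists: Iterable[List[str]]) -> List[str]:
    """Stand-in for the LLM 'reduce' call: keep each topic once, sorted."""
    return sorted({topic for topics in topic_lists for topic in topics})


def map_reduce(posts: List[str],
               map_fn: Callable[[str], List[str]],
               reduce_fn: Callable[[Iterable[List[str]]], List[str]]) -> List[str]:
    mapped = [map_fn(post) for post in posts]  # map: one call per post
    return reduce_fn(mapped)                   # reduce: one combined call


posts = [
    "What to expect during your first IVF cycle",
    "PCOS and ovulation: what the research says",
    "Tracking ovulation at home",
]
topics = map_reduce(posts, fake_extract_topics, fake_merge_topics)
print(topics)
```

Each map call sees only one post, so no single call needs the whole corpus in its prompt.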

What is LangChain?

LangChain is a developer framework that makes interacting with LLMs to solve natural language processing and text generation tasks much more manageable. Often, these types of tasks require a sequence of calls made to an LLM, passing data from one call to the next, which is where the “chain” part of LangChain comes into play.
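The "chain" idea itself is simple: each step consumes the previous step's output. The sketch below illustrates only that idea and does not use LangChain; `summarize` and `to_title` are hypothetical stand-ins for what would be LLM calls in practice.

```python
from typing import Callable, List


def run_chain(steps: List[Callable[[str], str]], text: str) -> str:
    """Feed the output of each step into the next one."""
    for step in steps:
        text = step(text)
    return text


def summarize(text: str) -> str:
    """Stand-in for an LLM call: keep only the first sentence."""
    return text.split(".")[0].strip() + "."


def to_title(text: str) -> str:
    """Stand-in for a second LLM call: turn the summary into a title."""
    return text.rstrip(".").title()


result = run_chain([summarize, to_title],
                   "ovulation tracking basics. More detail follows.")
print(result)
```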

One of the chain types LangChain provides is a MapReduceDocumentsChain, which encapsulates the implementation of a MapReduce approach to allow an LLM to derive insight across a large corpus of text that spans beyond the single prompt token limit.

How to use LangChain’s MapReduceDocumentsChain

To use the MapReduceDocumentsChain to solve our problem, we will independently set up two smaller chains using the LLMChain object: one for the map step and one for the reduce step. Finally, we create an overarching chain (using the MapReduceDocumentsChain object) to sequence the calls to the map and reduce chains.

Reading the Instagram posts captions from a CSV File

The first step in our process is to take a CSV file that contains a dump of all Instagram post captions.

We use Pandas to read the data and create a list of LangChain Documents, one per post, which is stored in the doc_captions variable. By creating a list of Document objects, we do not need to manually split the text later, as the built-in chain types in LangChain will automatically run the ‘map’ step of the algorithm on each individual element of the doc_captions list.

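import pandas as pd

from langchain.docstore.document import Document  # import path assumed from the LangChain releases of the time

df = pd.read_csv(filename)  # filename: path to the CSV export of the posts

captions = df['Caption'].iloc[1:]  # note: .iloc[1:] skips the first data row

doc_captions = [Document(page_content=t) for t in captions]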
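For readers without Pandas installed, the same loading step can be approximated with the standard library. This is a sketch under assumptions: the `Document` dataclass is a hypothetical stand-in for LangChain's class, the inline CSV text is made-up sample data, and unlike the `.iloc[1:]` call above it keeps every row but skips empty captions.

```python
import csv
import io
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Document:
    """Hypothetical stand-in mirroring the fields of LangChain's Document."""
    page_content: str
    metadata: Dict[str, str] = field(default_factory=dict)


def load_captions(csv_file) -> List[Document]:
    """Build one Document per non-empty value in the 'Caption' column."""
    reader = csv.DictReader(csv_file)
    return [
        Document(page_content=row["Caption"].strip())
        for row in reader
        if (row.get("Caption") or "").strip()
    ]


# Invented sample data; the second row has an empty caption.
sample_csv = io.StringIO(
    "Caption,Likes\n"
    '"Egg freezing: who is it for?",120\n'
    ",15\n"
    '"Myths about trying to conceive",98\n'
)
doc_captions = load_captions(sample_csv)
print([doc.page_content for doc in doc_captions])
```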

Creating the map prompt and chain

The following prompt is used to develop the “map” step of the MapReduce chain. This prompt is run on each individual post and is used to extract a set of “topics” local to that post.

llm = PromptLayerChatOpenAI(model=gpt_model,pl_tags=["InstagramClassifier"])

map_template = """The following is a set of captions taken from Instagram posts

written by a Reproductive Endocrinologist, they are delimited by ``` .

Based on these captions please create a comprehensive list of topics

relating to fertility, reproduction and women's health.

If a post does not relate to any of those broad themes,

please do not include them in the list of generated topics.

```

{captions}

```

"""

map_prompt = PromptTemplate.from_template(map_template)

map_chain = LLMChain(llm=llm,prompt=map_prompt)
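To see what the LLM receives for a single post, the template can be filled in by hand. LangChain's `PromptTemplate` uses Python f-string style placeholders by default, so plain `str.format` gives a close approximation. The shortened template and the sample caption below are illustrative, not the ones from the notebook.

```python
FENCE = "`" * 3  # the triple-backtick delimiter used in the prompt

# Shortened, illustrative version of the map template.
demo_template = (
    "The following is a set of captions, delimited by " + FENCE + " .\n"
    "List the fertility topics they cover.\n"
    + FENCE + "\n{captions}\n" + FENCE + "\n"
)


def render_map_prompt(caption: str) -> str:
    """Substitute one caption into the {captions} placeholder."""
    return demo_template.format(captions=caption)


prompt_text = render_map_prompt("Five myths about egg freezing")
print(prompt_text)
```

During the map step, one such filled-in prompt is sent per Document in doc_captions.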

Creating the reduce prompt and chain

The following prompt is for the “reduce” step of the algorithm. It operates against the entire set of output that is produced by the repeated execution of the “map” chain.

In our example, each run of the map chain yields a set of topics for the Instagram post it was fed. These per-post topic sets are then grouped together and passed as a single input to the reduce chain. The reduce chain is designed to take this global output from the map step and reduce it to a final set of unique fertility topics that minimizes contextual overlap.

In this final reduce prompt, we want the output to be a comma-separated list of values that downstream, non-LLM code can process. To steer the LLM toward returning a comma-separated list, we use LangChain’s CommaSeparatedListOutputParser, which adds its own set of prompt instructions (represented by the {format_instructions} parameter) to the call made to the LLM in the reduce step.

reduce_template = """The following is a set of fertility, reproduction and women's health

topics which are delimited by ``` .

Take these and organize these into a final, consolidated list of unique topics.

You should combine topics into one that are similar but use different variations of

the same words.

Also, you should combine topics that use an acronym versus the full spelling.

For example, 'TTC Myths' and 'Trying to Conceive Myths' should be a single topic called

'Trying to Conceive Myths'.

{format_instructions}

```

{topics}

```

"""

output_parser = CommaSeparatedListOutputParser()

format_instructions = output_parser.get_format_instructions()

reduce_prompt = PromptTemplate(template=reduce_template,

input_variables=["topics"],

partial_variables={"format_instructions":format_instructions})

reduce_chain=LLMChain(llm=llm,prompt=reduce_prompt)
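Conceptually, the parser's job on the way back is small: turn the model's comma-separated reply into a Python list. The function below is a simplified stand-in written for illustration, not LangChain's actual implementation, and the sample reply is invented.

```python
from typing import List


def parse_comma_separated(reply: str) -> List[str]:
    """Split a comma-separated reply into clean, non-empty items."""
    return [item.strip() for item in reply.split(",") if item.strip()]


# Invented reply with uneven spacing and a trailing comma.
sample_reply = "Trying to Conceive Myths, Egg Freezing,  IVF Success Rates,\n"
parsed_topics = parse_comma_separated(sample_reply)
print(parsed_topics)
```

One limitation of this naive split is that a topic containing a comma would be broken into two items, which is a reason to keep the requested topic names short.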

Creating the MapReduceDocumentsChain

The final step is to use the map and reduce chains to create a single instance of the MapReduceDocumentsChain object and then run it.

The MapReduceDocumentsChain takes as an input:

- llm_chain: this is the “map” chain we defined earlier on.

- reduce_documents_chain: this is an instance of the ReduceDocumentsChain object, which wraps the more complex logic of the “reduction” step. As part of creating the ReduceDocumentsChain, you specify which chain to use to combine (“reduce”) the output of the map step. As the astute reader might guess, the ReduceDocumentsChain is linked to the ‘reduce’ chain we defined earlier, here wrapped in a StuffDocumentsChain.

- document_variable_name: this is the name of the input variable in our ‘map’ chain, which receives the text to be evaluated in the ‘map’ step. If you look at the prompt we use in the ‘map’ step, you will see it expects an input parameter named ‘{captions}’. When the chain is run, a sequence of calls is made to the ‘map’ chain whereby the {captions} parameter contains a small subset of the entire text that is to be analyzed.

combine_documents_chain = StuffDocumentsChain(

llm_chain=reduce_chain, document_variable_name="topics"

)

reduce_documents_chain = ReduceDocumentsChain(

combine_documents_chain=combine_documents_chain,

collapse_documents_chain=combine_documents_chain,

token_max=4000)

map_reduce_chain = MapReduceDocumentsChain(

llm_chain=map_chain,

reduce_documents_chain=reduce_documents_chain,

document_variable_name="captions",

return_intermediate_steps=False)
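The role of token_max and the collapse chain is easier to see in a stripped-down model. The sketch below approximates the idea and is not LangChain's implementation: it counts words instead of tokens, and `merge` is a hypothetical stand-in for the LLM-backed reduce chain that simply de-duplicates topics. While the combined map outputs exceed the budget, they are packed into groups that fit, each group is collapsed into one shorter document, and the loop repeats before a final combine.

```python
from typing import List


def size(doc: str) -> int:
    """Crude token proxy: number of whitespace-separated words."""
    return len(doc.split())


def merge(docs: List[str]) -> str:
    """Stand-in for the reduce LLM call: de-duplicate topics, keep order."""
    seen: List[str] = []
    for doc in docs:
        for topic in doc.split(", "):
            if topic and topic not in seen:
                seen.append(topic)
    return ", ".join(seen)


def split_into_groups(docs: List[str], token_max: int) -> List[List[str]]:
    """Greedily pack docs into groups whose total size does not exceed token_max."""
    groups: List[List[str]] = []
    current: List[str] = []
    for doc in docs:
        if current and sum(map(size, current)) + size(doc) > token_max:
            groups.append(current)
            current = []
        current.append(doc)
    if current:
        groups.append(current)
    return groups


def reduce_with_collapse(docs: List[str], token_max: int) -> str:
    while sum(map(size, docs)) > token_max:
        collapsed = [merge(g) for g in split_into_groups(docs, token_max)]
        if sum(map(size, collapsed)) >= sum(map(size, docs)):
            break  # no progress; stop rather than loop forever
        docs = collapsed
    return merge(docs)  # final combine step


# Invented map outputs, one comma-separated topic string per post.
map_outputs = ["IVF, PCOS", "PCOS, Ovulation", "IVF, Ovulation", "Egg Freezing, IVF"]
final_topics = reduce_with_collapse(map_outputs, token_max=6)
print(final_topics)
```

In the chain configured above, the same StuffDocumentsChain serves as both the collapse and the final combine step, which mirrors `merge` being used in both places here.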

Running the full MapReduce chain

In this step, we run the MapReduceDocumentsChain that triggers the execution of the entire map-reduce algorithm, along with the sequence of LLM calls necessary to solve it.

The MapReduceDocumentsChain processes all of the post captions that were read in from the CSV file and performs the “map” step on each of them. Once the “map” step is completed for all read captions, it runs the final “reduce” step and outputs a final list of topics in a comma-separated list.

output = map_reduce_chain.run(doc_captions)

new_topics = output_parser.parse(output)
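The stated goal was to output the topics to a file. How the notebook does this is not shown in this post, so the following is only one possible sketch: `save_topics` and the file name are hypothetical, and the topic list is sample data standing in for `new_topics`.

```python
import os
import tempfile
from typing import List


def save_topics(topics: List[str], path: str) -> None:
    """Write one topic per line, sorted and de-duplicated."""
    unique_sorted = sorted(set(topics))
    with open(path, "w", encoding="utf-8") as handle:
        handle.write("\n".join(unique_sorted) + "\n")


# Sample data standing in for the parsed output of the chain.
sample_topics = ["IVF Success Rates", "Egg Freezing", "Egg Freezing"]
out_path = os.path.join(tempfile.gettempdir(), "topics_demo.txt")
save_topics(sample_topics, out_path)

# Read the file back to confirm what was written.
with open(out_path, encoding="utf-8") as handle:
    saved = handle.read().splitlines()
print(saved)
```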

Conclusion

The map-reduce capabilities in LangChain offer a relatively straightforward way of approaching the classification problem across a large corpus of text. By leveraging the MapReduceDocumentsChain, you can work around the input token limitations of modern LLMs to build applications that can operate on a body of input text of any size.

All the code referenced in this post is available in the BlueLabel AI Cookbook and can be run as a Jupyter Notebook. The notebook uses PromptLayer, a neat tool that lets you see the exact prompts going into and out of the LLMs when your code is run (you will need to create at least a free account). I encourage you to run the notebook and then watch, in PromptLayer, the sequence of LLM calls that shows the MapReduce algorithm at work.

Bobby Gill