What is LangChain: How It Enables Businesses to Do More with LLMs

Today’s large language models (LLMs) can help businesses unlock more productivity than ever, but they have limitations: every model is bound by its training data cut-off date, and most can’t readily reach out to web resources or work with other language models.

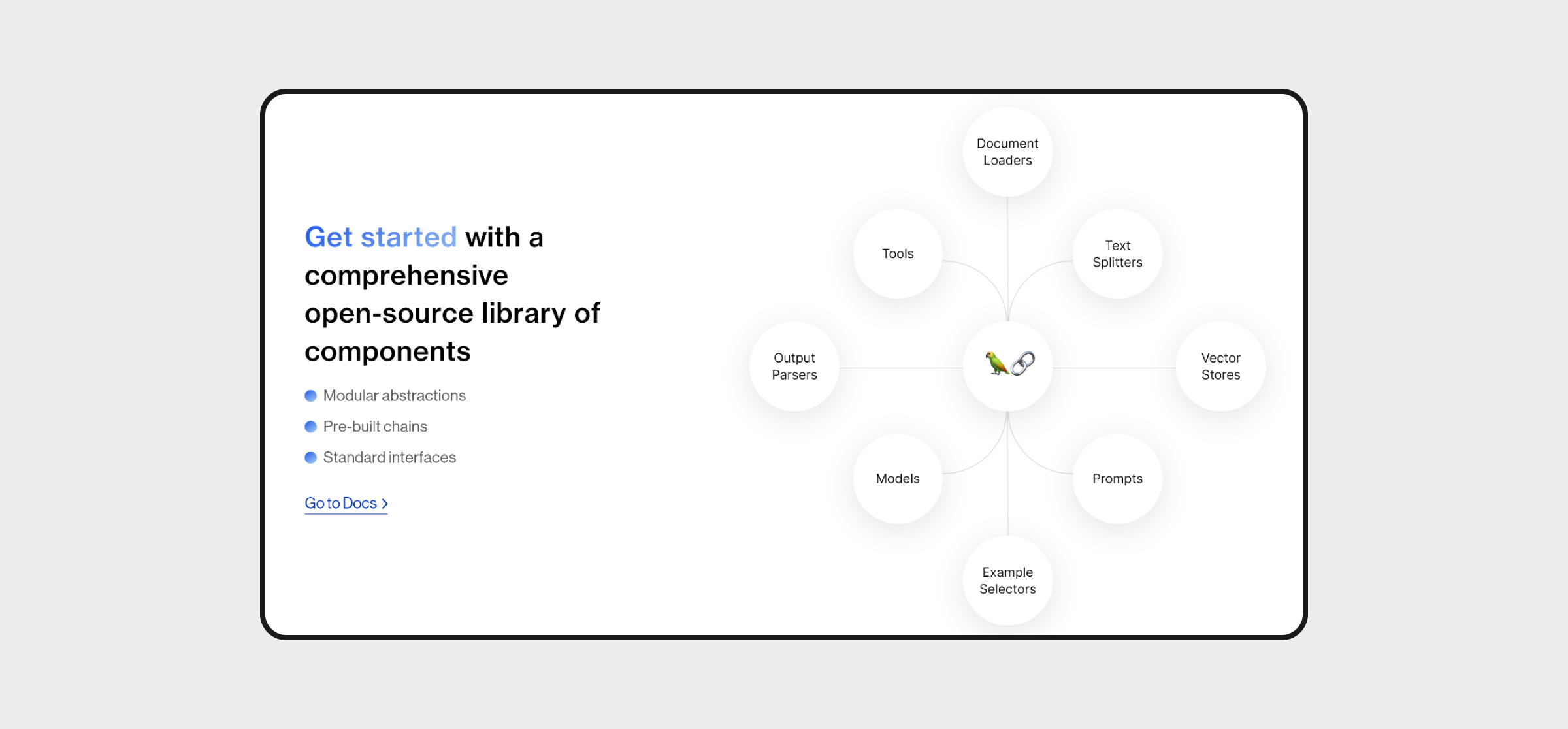

To improve interoperability across the board, LangChain’s framework offers a range of tools that let businesses connect to different vendors (including other LLMs) and integrate a comprehensive collection of open-source components.

Here, we’ll examine the framework, explain its capabilities, and walk through examples that demonstrate a sliver of what it can do.

What is LangChain?

LangChain is a framework designed to simplify building applications on top of LLMs. Its use cases largely overlap with those of LLMs in general, such as document analysis and summarization, chatbots, and code analysis.

Being agentic and data-aware means it can dynamically connect different systems, chains, and modules to use data and functions from many sources, like different LLMs. | Source: LangChain

LangChain offers access to LLMs from providers such as OpenAI, Hugging Face, Cohere, and AI21 Labs, among others. Developers call these models through each platform’s API using platform-specific API tokens, then build on their capabilities as they see fit.
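The benefit of reaching many vendors through one framework is that application code can depend on a single interface while each provider keeps its own token. The following is a minimal plain-Python sketch of that idea, not LangChain’s actual classes: `ChatModel`, `FakeProviderA`, `FakeProviderB`, `load_model`, and the `PROVIDER_*_API_TOKEN` variable names are all hypothetical stand-ins, and the fake providers return canned strings instead of calling a real API.

```python
import os
from typing import Mapping, Optional, Protocol


class ChatModel(Protocol):
    """Hypothetical common interface that every provider adapter implements."""

    def complete(self, prompt: str) -> str: ...


class FakeProviderA:
    """Stand-in for a real vendor client; a real adapter would call the vendor's API."""

    def __init__(self, api_token: str) -> None:
        self.api_token = api_token

    def complete(self, prompt: str) -> str:
        return f"[provider-a] {prompt}"


class FakeProviderB:
    """A second stand-in with different behavior behind the same interface."""

    def __init__(self, api_token: str) -> None:
        self.api_token = api_token

    def complete(self, prompt: str) -> str:
        return f"[provider-b] {prompt.upper()}"


# Hypothetical registry: provider name -> (adapter class, env var holding its token).
PROVIDERS = {
    "a": (FakeProviderA, "PROVIDER_A_API_TOKEN"),
    "b": (FakeProviderB, "PROVIDER_B_API_TOKEN"),
}


def load_model(name: str, env: Optional[Mapping[str, str]] = None) -> ChatModel:
    """Build the adapter for `name`, reading its platform-specific token from env."""
    env = os.environ if env is None else env
    adapter_cls, token_var = PROVIDERS[name]
    token = env.get(token_var)
    if not token:
        raise RuntimeError(f"missing API token: set {token_var}")
    return adapter_cls(token)


def answer(model: ChatModel, question: str) -> str:
    # Application code depends only on the interface, not on a specific vendor.
    return model.complete(question)


model = load_model("b", env={"PROVIDER_B_API_TOKEN": "dummy-token"})
print(answer(model, "hello"))  # [provider-b] HELLO
```

Swapping vendors then means changing the name passed to `load_model`, not rewriting `answer`.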

Businesses of any size, from startups to global enterprises, can use LangChain to create multilingual chatbots, language translation tools, and even multilingual websites.

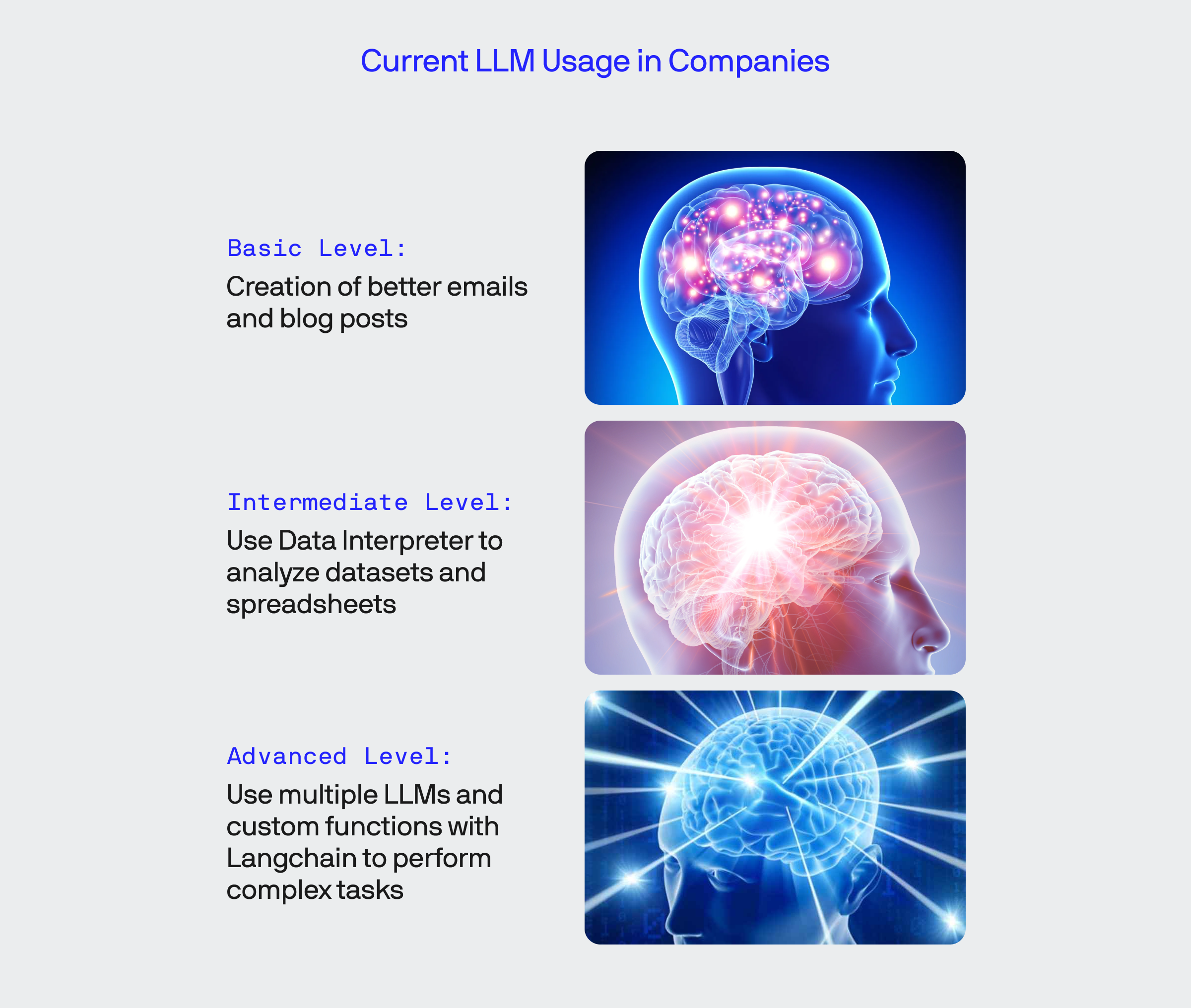

The value is that with a bit of code, you can do significantly more than, for example, logging into your OpenAI account and having ChatGPT analyze a CSV file you manually exported from a database. To illustrate, I made the meme above, which succinctly sums up the hierarchy of current LLM use.

Out-of-the-box solutions built with LangChain and similar frameworks will enter the market soon, but for now you’ll need coding knowledge to create custom solutions.

Some products on the market, like Bing running ChatGPT, can pull in data from referenced websites, but they are significantly limited in what they can do with external data and functions.

How does LangChain work?

LangChain essentially serves as a middleman between LLMs, data sources, and API-accessible functions. More precisely, it’s a comprehensive open-source library of components that let users build better (i.e., more customized) solutions by interconnecting different resources.

By adding LangChain to your product, you can take advantage of LLM chaining (we’ll show why this can be useful later), as well as several other kinds of integrations and toolsets for parsing and manipulating data.

Its API functions let you pass generated or extracted data between components, whether into storage, to another system for further analysis, into a function for computation, or on to many other destinations.
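The chaining idea can be sketched in plain Python: each step reads from and writes to a shared dictionary, and the chain runs the steps in order so that one component’s output becomes the next one’s input. This is a conceptual sketch, not LangChain’s API; `run_chain`, `fake_llm_summarize`, `extract_keywords`, and `store` are hypothetical stand-ins for an LLM call, a parsing component, and a storage sink.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Step = Callable[[State], State]


def run_chain(steps: List[Step], state: State) -> State:
    """Run steps in order; each step receives the previous step's output."""
    for step in steps:
        state = step(state)
    return state


def fake_llm_summarize(state: State) -> State:
    # Stand-in for an LLM call: keep only the first sentence of the document.
    summary = state["document"].split(".")[0].strip() + "."
    return {**state, "summary": summary}


def extract_keywords(state: State) -> State:
    # Stand-in for a parsing component: capitalized words from the summary.
    words = [w.strip(".,") for w in state["summary"].split()]
    return {**state, "keywords": [w for w in words if w[:1].isupper()]}


def store(database: List[State]) -> Step:
    # Storage sink: append a copy of the result to an in-memory "database".
    def _store(state: State) -> State:
        database.append(dict(state))
        return state
    return _store


db: List[State] = []
result = run_chain(
    [fake_llm_summarize, extract_keywords, store(db)],
    {"document": "LangChain connects OpenAI models to Postgres. It is open source."},
)
print(result["summary"])   # LangChain connects OpenAI models to Postgres.
print(result["keywords"])  # ['LangChain', 'OpenAI', 'Postgres']
```

Because every step shares the same signature, steps can be reordered, swapped for real LLM calls, or reused in other chains.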

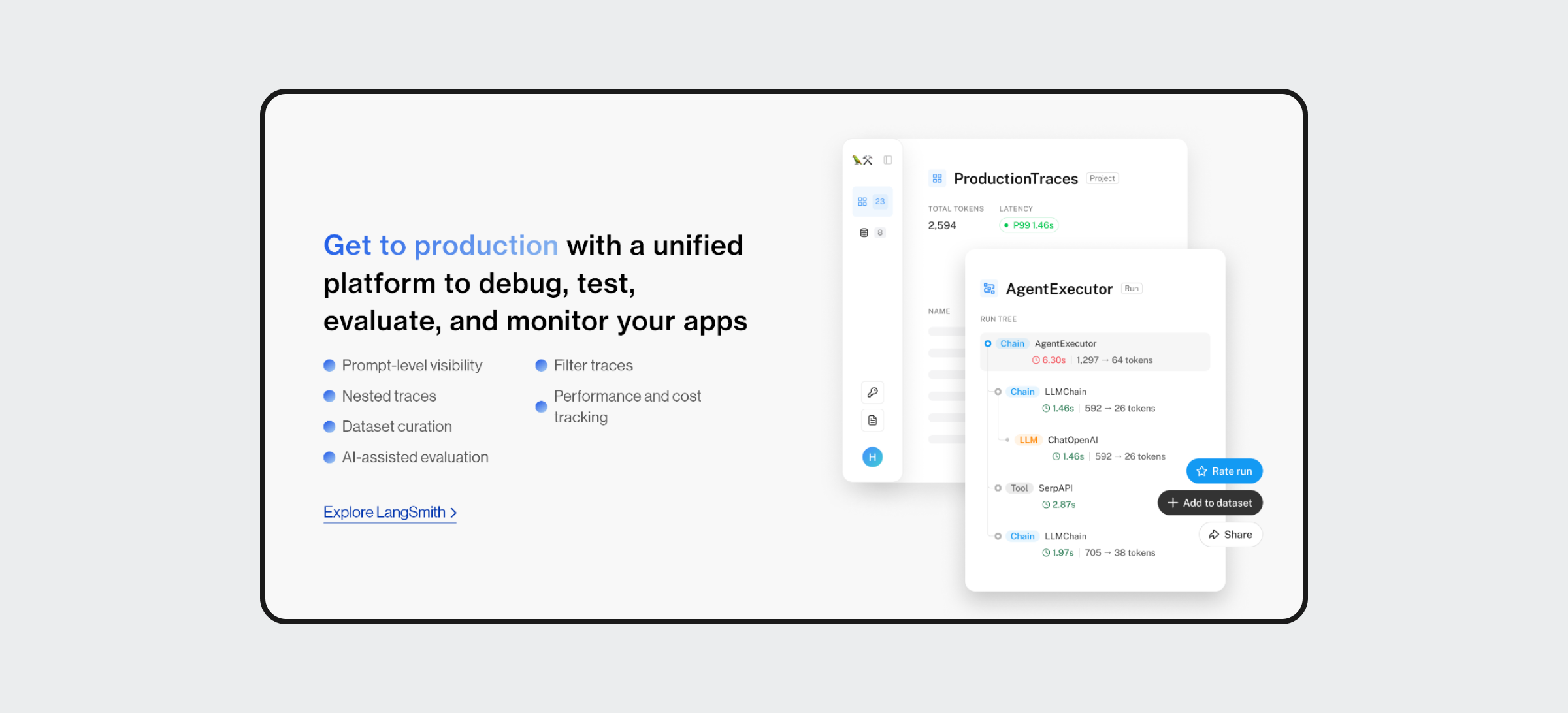

Coming soon: LangSmith. | Source: LangChain

The LangChain team is currently building a full-fledged development platform called LangSmith that will offer deep, real-time insight into applications, with complete visibility into every chain. Right now, developers have to manually test anything that isn’t working correctly; soon, LangSmith’s full suite of tools for debugging, testing, evaluating, and monitoring should make development with LangChain much smoother.

A few language-centric examples of what you can do with LangChain

Many examples are in the works, so we’ll touch on a few valuable use cases where language manipulation is the central theme.

Localizing & translating web or app content

One problem global brands face is creating meaningful sites for audiences in other locales. Launching derivative sites and filling them with content that doesn’t look like someone “did their best” with Google Translate is often time-consuming and expensive.

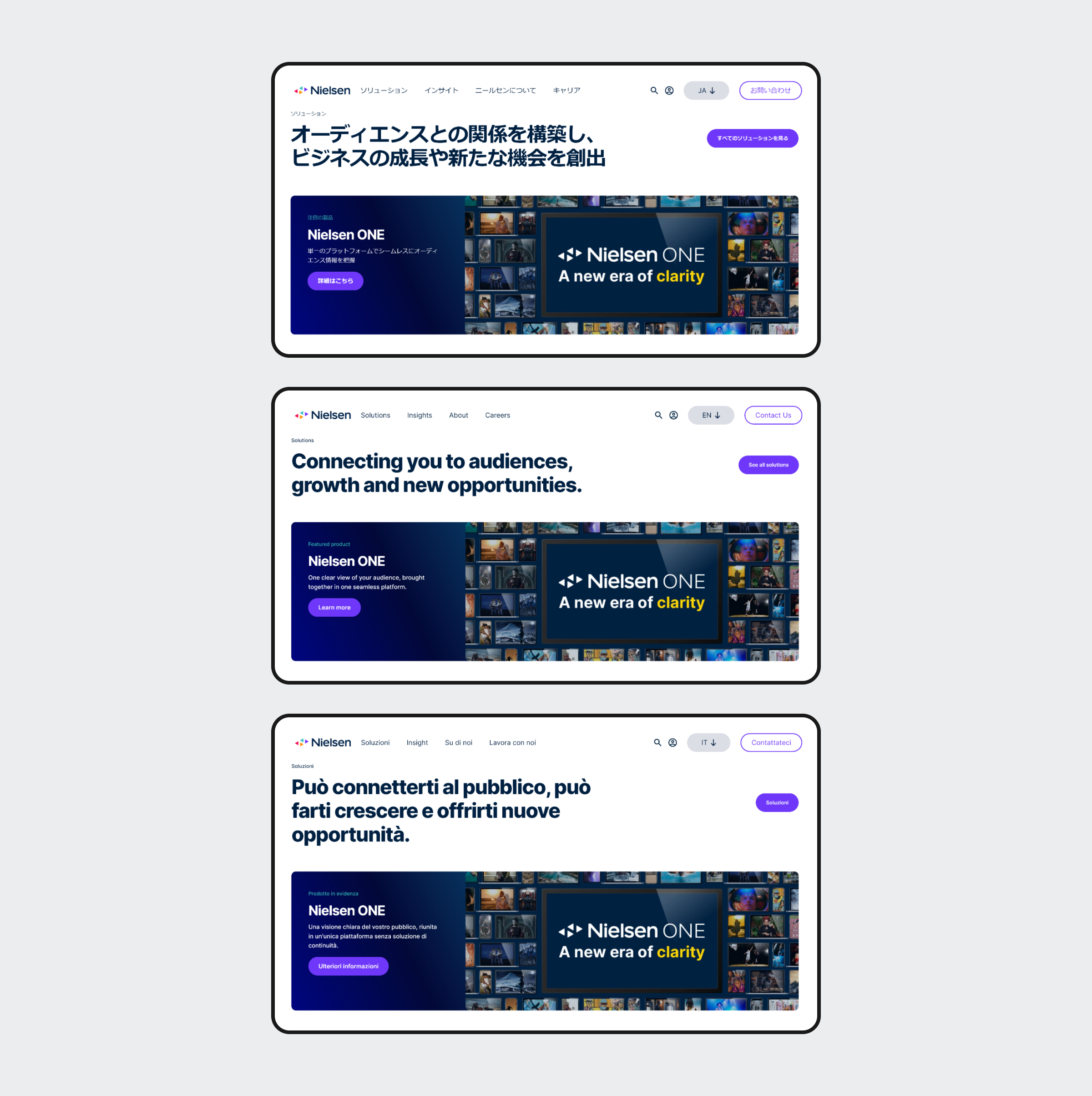

This example from the data analytics company Nielsen shows how they deliver a consistent experience for users and prospects in different regions around the globe. | Source: Nielsen

Building functional, engaging sites for locales other than your own takes much more work than simply translating text to another language and pasting it to a mirror site.

For example, many phrases don’t have word-for-word translations in other languages. Even when they do, character lengths can differ radically, so the UI layout needs to be adjusted to keep text from overflowing or leaving an area awkwardly empty.

Though Nielsen used a solution from Weglot to translate their content, this is an ideal job for LangChain.

Using LLM chaining and other tools, you could automate most of the processes needed to translate content into other languages and publish the revised material appropriately to a localized site.

There’s enough flexibility to stream manual translations alongside (or in place of) LLM translations, which will often be necessary because, as with any AI, errors happen. Human input also helps keep out the awkward or sloppy translations that character-length disparities can cause, as we see in the Nielsen example.
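The merge-and-review step described above might look something like this minimal plain-Python sketch, which assumes LLM output has already been collected into a dictionary. Human translations override LLM ones, and a simple character-length ratio flags strings likely to break a layout. The `max_ratio` threshold of 1.5, the `LocalizedString` fields, and the German sample strings are illustrative assumptions, not part of any real localization tool.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class LocalizedString:
    key: str
    text: str
    source: str          # "manual" or "llm"
    needs_review: bool   # True when the length shift risks layout problems


def merge_translations(
    english: Dict[str, str],
    llm_translations: Dict[str, str],
    manual_translations: Dict[str, str],
    max_ratio: float = 1.5,
) -> List[LocalizedString]:
    """Prefer human translations; flag LLM output whose length strays too far."""
    results = []
    for key, source_text in english.items():
        if key in manual_translations:
            # A human-provided string always overrides the LLM output.
            results.append(LocalizedString(key, manual_translations[key], "manual", False))
            continue
        text = llm_translations[key]
        ratio = len(text) / max(len(source_text), 1)
        # Flag strings much longer or much shorter than the original.
        flagged = ratio > max_ratio or ratio < 1 / max_ratio
        results.append(LocalizedString(key, text, "llm", flagged))
    return results


english = {"cta": "Get started", "headline": "Connecting people"}
llm = {"cta": "Jetzt loslegen und kostenlos testen", "headline": "Menschen verbinden"}
for item in merge_translations(english, llm, manual_translations={}):
    print(item.key, item.source, item.needs_review)
# cta llm True
# headline llm False
```

Flagged strings would go to a human reviewer rather than straight to the localized site.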

Specifically, let’s look at the English and Japanese versions: the wording differs slightly because the gerund “connecting” in the headline would transpose directly into a more prepositional phrase, and it’s a word you wouldn’t typically use in Japanese when discussing people. Instead, the Japanese headline translates to something closer to “Build relationships with your audience (to) create business growth and new opportunity.”

Using LangChain, you can create layers of LLM analysis tools to filter translations and rework phrasing appropriately. With most CMS solutions and custom web applications, you can further create custom scripts to automate most content publishing from a database or vector store to a website.

Creating a bot for social media that can post just like you

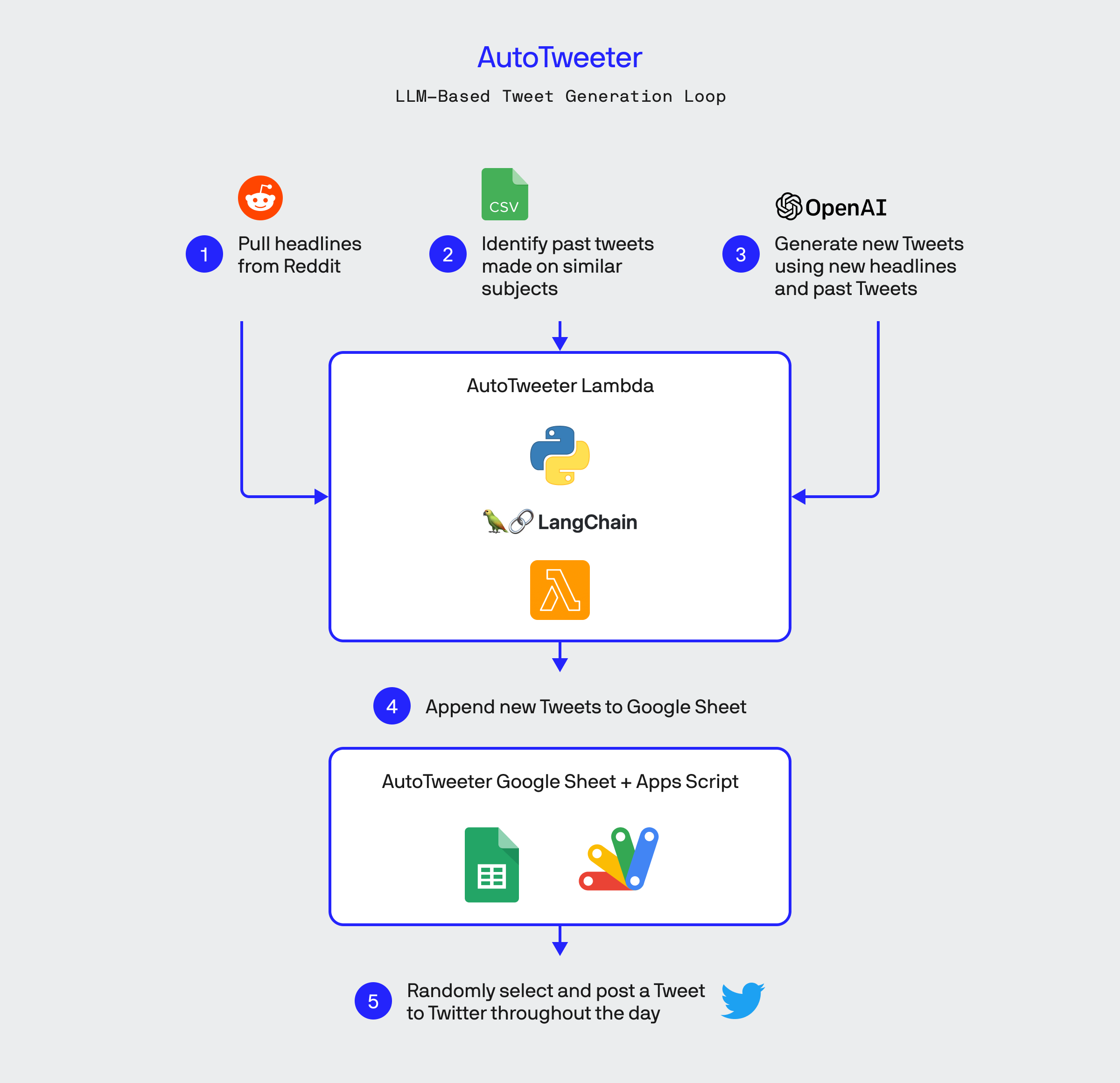

Social media already has enough bots engaging with people as if they’re real people, but for the sake of making a functional example, our co-founder Bobby created a bot called AutoTweeter for the social media platform we all still call Twitter.

The system runs GPT-4 inside an AWS Lambda container, where it reads from and writes to a Google Sheet used as a rudimentary database.

AutoTweeter uses LangChain to run a sequence of three LLMChains that check Bobby’s existing tweets against headlines pulled from r/worldnews, then autonomously create and publish humorous, non-offensive tweets in his tone.
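AutoTweeter’s code isn’t shown here, so the following is only a plain-Python sketch of the three-stage shape described above, with each LLMChain replaced by a stub function. `pick_fresh_headline`, `draft_tweet`, `passes_review`, the word-overlap test, and the blocklist are all hypothetical stand-ins for whatever the real chains do.

```python
import re
from typing import List, Optional, Set

BLOCKED_WORDS = {"killed", "attack"}  # hypothetical "keep it non-offensive" filter
MAX_TWEET_CHARS = 280


def content_words(text: str) -> Set[str]:
    # Lowercased words longer than three letters, a crude proxy for topic.
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) > 3}


def pick_fresh_headline(headlines: List[str], past_tweets: List[str]) -> Optional[str]:
    """Stage 1 (stub): return the first headline not already covered by past tweets."""
    seen: Set[str] = set()
    for tweet in past_tweets:
        seen |= content_words(tweet)
    for headline in headlines:
        topic = content_words(headline)
        if topic and len(topic & seen) / len(topic) < 0.5:
            return headline
    return None


def draft_tweet(headline: str) -> str:
    """Stage 2 (stub): an LLM would write the joke in the author's tone here."""
    return f"Just read: {headline}. Somehow still less chaotic than my fantasy hockey league."


def passes_review(tweet: str) -> bool:
    """Stage 3 (stub): reject drafts that are too long or hit the blocklist."""
    return len(tweet) <= MAX_TWEET_CHARS and not (content_words(tweet) & BLOCKED_WORDS)


def run_autotweeter(headlines: List[str], past_tweets: List[str]) -> Optional[str]:
    # Each stage only runs if the previous one produced something usable.
    headline = pick_fresh_headline(headlines, past_tweets)
    if headline is None:
        return None
    tweet = draft_tweet(headline)
    return tweet if passes_review(tweet) else None


tweet = run_autotweeter(
    ["Cheese prices soaring across Europe", "Scientists discover new species of deep sea fish"],
    ["Cheese prices soaring again, my snack budget weeps"],
)
print(tweet)
```

Returning `None` at any stage, rather than posting anyway, keeps a human-friendly failure mode for an unattended bot.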

While you could use this same technique to pollute social media with your very own irreverent bot, there’s a broader implication for business behind this proof of concept.

Chatbots have incredible applications in customer service, often serving as the front line for support and intake. However, these products must be flexible enough to handle the full scope of problems or scenarios a customer might bring to such a system.

We’ve likely all encountered chatbots just as bad as poorly configured phone auto attendants and IVR systems that waste your time before transferring you to someone who may or may not be “the one” to solve your issue.

Rather than creating a bot to tweet in your tone on your behalf about your love for processed snacks, hockey, and political happenings, you could train such a system on your brand voice, knowledge bases, and other resources to create a highly valuable feature for your digital touchpoints.

Similar setups to AutoTweeter would allow businesses to parse past and present customer queries to understand drop-off points and build the appropriate flows to solve customer problems on the spot, instead of spitting them into a queue to wait for a human and plot how they’re going to steal from your business.
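To make “understand drop-off points” concrete, here is a small sketch that counts, across logged conversations, the last bot step customers reached before abandoning or asking for a human. The log format (an ordered list of step names plus an outcome) and the step names are hypothetical; a real system would pull these from its own chat logs.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass
class Conversation:
    steps: List[str]   # bot flow steps the customer passed through, in order
    outcome: str       # "resolved", "abandoned", or "escalated"


def drop_off_points(conversations: List[Conversation]) -> Counter:
    """Count the last step reached in conversations that did not resolve."""
    counts: Counter = Counter()
    for convo in conversations:
        if convo.outcome != "resolved" and convo.steps:
            counts[convo.steps[-1]] += 1
    return counts


logs = [
    Conversation(["greet", "identify_account", "refund_policy"], "abandoned"),
    Conversation(["greet", "identify_account", "refund_policy"], "escalated"),
    Conversation(["greet", "order_status"], "resolved"),
    Conversation(["greet", "identify_account"], "abandoned"),
]
print(drop_off_points(logs).most_common(1))  # [('refund_policy', 2)]
```

The most common drop-off step is a natural first candidate for a better flow.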

Here, you essentially create a curated ML system that’s tailored to your brand with specific issues and requests from your customers – with a little bit of dev magic, you can quickly build a solution that integrates features like payment systems, tech support, ordering, account management, and so much more.
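One common way to wire a support bot into features like payments, tech support, ordering, and account management is an intent router: classify the message, dispatch it to the matching handler, and fall back to a human for anything unrecognized. In this sketch the classifier is a keyword stub standing in for an LLM call, and every handler name and keyword list is hypothetical.

```python
import re
from typing import Callable, Dict, Tuple

Handler = Callable[[str], str]


def handle_payment(msg: str) -> str:
    return "Routing you to secure payment options."


def handle_tech_support(msg: str) -> str:
    return "Let's troubleshoot: have you tried restarting the device?"


def handle_order(msg: str) -> str:
    return "Looking up your order now."


def handle_account(msg: str) -> str:
    return "I can help update your account details."


def escalate_to_human(msg: str) -> str:
    return "Connecting you with a team member."


# Keyword stub standing in for an LLM intent classifier.
INTENT_KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "payment": ("pay", "card", "invoice", "refund"),
    "tech_support": ("error", "crash", "broken", "login"),
    "order": ("order", "shipping", "delivery", "track"),
    "account": ("password", "email", "address", "profile"),
}

HANDLERS: Dict[str, Handler] = {
    "payment": handle_payment,
    "tech_support": handle_tech_support,
    "order": handle_order,
    "account": handle_account,
}


def classify_intent(msg: str) -> str:
    """Stub classifier: first intent whose keywords appear as whole words."""
    tokens = set(re.findall(r"[a-z]+", msg.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if tokens & set(keywords):
            return intent
    return "unknown"


def route(msg: str) -> str:
    """Dispatch to the matching handler; anything unrecognized goes to a human."""
    return HANDLERS.get(classify_intent(msg), escalate_to_human)(msg)


print(route("Where is my order?"))  # Looking up your order now.
```

The human fallback reflects the caveat below: automation handles the routine cases, and people handle the rest.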

As a caveat, understand that modern AI is still relatively new across the board, and unattended automation is not advisable for anything. Remember that AI works best when used as a productivity multiplier and not an outright replacement for a human.

Use LangChain & LLMs to enhance productivity

Plenty of solutions already in the works use sequences of LLMs to accomplish tasks that a single system can’t handle on its own.

Stay tuned as we continue to cover the emerging technologies in the AI and ML space.